Folks have gotten used to Windows 10. Now Microsoft is pulling out the rug with a new version of Windows. When I heard of Windows 11, my first thought was that the disbanded Vista product team had staged an armed coup in Bill Gates’ old office and regained control of Windows. I haven’t installed Windows 11, although grandson Christopher has. He doesn’t like it.

I think Microsoft has something cooking in Windows 11.

Microsoft releases

New releases of Windows are always fraught. Actually, new releases of anything from Microsoft get loads of pushback. Ribbon menu anxiety in Office, the endless handwringing over start menus moving and disappearing in Windows. Buggy releases. It goes on and on.

Having released a few products myself, I sympathize with Microsoft.

Developers versus users

A typical IT system administrator says, “Change is evil. What’s not broke, don’t fix. If I can live with a product, it’s not broke.” Most computer users think the same way: “I’ve learned to work with your run-down, buggy product. Now, I’m busy working. Quit bothering me.”

Those positions are understandable, but designers and builders see products differently. They continuously watch how customers use a product and ask how it might work more effectively, what users might want to do that they can’t, and how users could become more productive and add new tasks and ways of working to their repertoire.

Designers and builders are also attentive to advances in technology. In computing, we’ve seen available computing resources (instruction execution capacity, storage volume, and network bandwidth) nearly double every year or two. In a word, speed. 2021’s smartphones dwarf the supercomputers of the era when Windows and its predecessor, DOS, were invented.

No one ever likes a new release

At its birth, Windows was condemned as flashy eye candy that required then-expensive bit-mapped displays and sapped performance with intensive graphics processing. In other words, Windows was a productivity killer and an all-round horrible idea, especially to virtuoso users who had laboriously internalized all the command line tricks of text interfaces. Some developers, me included, still prefer a DOS-like command line to a graphical interface like Windows for some tasks.

However, Windows and other graphical interfaces, such as the X Window System on Unix and Linux, were rapidly adopted as bit-mapped displays proliferated and processing power rose. Today, when paleolithic relics like me use a character-based command line, it is almost always simulated inside a graphical interface. Pure character interfaces are still around, but mostly on the tiny LCD screens of printers and kitchen appliances.

Designers and builders envisioned the benefits from newly available hardware and computing capacity and pushed the rest of us forward.

Success comes from building for the future, not doubling down on the past. But until folks share in the vision, they think progress is a step backwards.

Is the Windows 11 Start menu a fiasco? Could be. No development team gets everything right, but I’ll give Windows 11 a spin and try not to be prejudiced by my habits.

Weird Windows 11 requirements

Something more is going on with Windows 11. Microsoft is placing hardware requirements on Windows 11 that will prevent a large share of existing Windows 10 installations from upgrading. I always expect to be nudged toward upgraded hardware. Customers who buy new hardware expect to benefit from newer, more powerful devices, and requirements to support legacy hardware are an obstacle to exploiting new hardware. Eventually, you have to turn your back on old hardware and move on, leaving some irate customers behind. No developer likes to do this, but eventually they must, or the competition eats them alive.

Microsoft forces Windows 11 installations to be more secure by requiring a higher level of Trusted Platform Module (TPM) support, specifically TPM 2.0. A TPM is a microcontroller that supports several cryptographic security functions that help verify that users and computers are what they appear to be and have not been spoofed or tampered with. TPMs are usually implemented as a small physical chip, although they can also be implemented in firmware or software. Requiring high-level TPM support makes sense in a world of mounting cybersecurity threats.

But the Windows 11 requirements seem extreme. As I type this, I am using a ten-year-old laptop running Windows 10. For researching and writing, it’s more than adequate, but it does not meet Microsoft’s stated requirements for Windows 11. I’m disgruntled, and I’m not alone. Our grandson Christopher has figured out a way to install Windows 11 on some legacy hardware. That is impressive, but it is way beyond most users, and Microsoft could easily cut off this route.

I have an idea where Redmond is going with this. It may be surprising.

Today, the biggest and most general technical step forward in computing is the near-universal availability of high-capacity network communications channels. Universal high-bandwidth Internet access became a widely accepted national necessity when work went online during the pandemic. High-capacity 5G cellular wireless networks are beginning to roll out. (What passes for 5G now is far beneath the full 5G capacity we will see in the future.) Low-earth-orbit satellite networks promise to link isolated areas to the network. Ever-faster Wi-Fi local area networks bring that connectivity into homes and offices.

This is not fully real. Yet. But it’s close enough that designers and developers must assume it is already present, just as we had to assume bit-mapped displays were everywhere while they were still luxuries.

What does ubiquitous high bandwidth connection mean for the future? More streaming movies? Doubtless, but that’s not news: neighborhood Blockbuster Video stores are already closed.

Thinking it through

In a few years, every computer will have a reliable, high-capacity connection to the network. All the time. Phones are already close, and their connections will become both faster and more reliable than they are today. That includes every desktop, laptop, tablet, phone, home appliance, vehicle, industrial machine, lamp post, traffic light, and sewer sluice gate. The network will also be populated with computing centers whose capacities will dwarf the already gargantuan capacities available today. Your front door latch may already have access to more data and computing capacity than all of IBM and NASA in 1980.

At the same time, ransomware and other cybercrimes are sucking the lifeblood from business and threatening national security.

Microsoft lost the war for the smartphone to Google and Apple. How will Windows fit in the hyperconnected world of 2025? Will it even exist? What does Satya Nadella think about when he wakes late in the night?

Windows business plan

The Windows operating system (OS) business plan is already a holdover from the past. IBM, practically the inventor of the operating system, de-emphasized building and selling OSs decades ago. Digital Equipment Corporation (DEC), a stellar OS builder, is gone, absorbed by Compaq and then HP. Sun Microsystems, another OS innovator, is buried in the murky depths of Oracle. Apple’s operating systems rest on Darwin, an open source Unix foundation that borrows heavily from FreeBSD. Google’s Android is built on the Linux kernel. Why have all these companies gotten out of, or never entered, the proprietary OS development business?

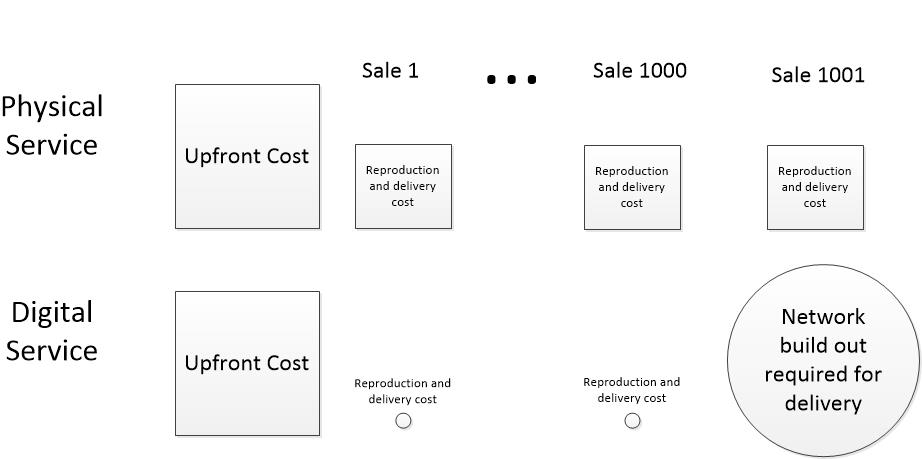

Corporate economics

The answer is simple corporate economics: there’s no money in it. Whoa! you say. Microsoft made tons of money off its flagship product, Windows. The key word is “made,” not “makes.” Building and selling operating systems was a money machine for Gates and company back in the day, but no longer. Twenty years ago, when Windows ruled, the only competing consumer OS was Apple’s, a niche product in education and some creative sectors. Microsoft pwned the personal desktop in homes and businesses, and every non-Apple computer sold was another boost to the Microsoft bottom line. Now, Microsoft’s Windows division has to struggle on many fronts.

Open source OSs (Android, Apple’s BSD-derived systems, and the many flavors of Linux) are all fully competitive in ease of installation and use. They weren’t in 2000. Now, they are slick, polished systems with features comparable to Windows.

To stay on top, Windows has to out-perform, out-feature, and out-secure these formidable competitors. In addition, unlike Apple, part of the Windows business plan is to run on generic hardware. Developing for hardware you don’t control is difficult, and the burden of coding to and testing on varying equipment is horrendous. Microsoft can make rules that the hardware is supposed to follow, but in the end, if Windows does not shine on Lenovo, HP, Dell, Acer, and Asus machines, the Windows business plunges into arctic winter.

On top of all that, Microsoft is at another tremendous disadvantage. It relies on in-house developers cutting proprietary code to advance Windows. Microsoft’s competitors rely on foundations that coordinate independent contributors to open source code bases. Many of these contributors are on the payrolls of big outfits like IBM, Google, Apple, Oracle, and Facebook.

Rough times

Effectively, these dogs are ganging up on Microsoft. Through foundations such as the Linux, Apache, and Eclipse foundations, these corporations cooperate to build basic infrastructure, like the Linux kernel, instead of each building it for themselves. This saves a ton of development costs. And since the code is controlled by a foundation in which they own a stake, they don’t have to worry about a competitor pulling the rug out from under them.

Certainly, many altruistic independent developers contribute to open source code, but not a line they write gets into key projects without the scrutiny of the big dogs. From some angles, the open source foundations are the biggest monopolies in the tech industry. And Windows is out in the cold.

What will Microsoft do? I have no inside knowledge, but I have a good guess that Microsoft is contemplating a tectonic shift.

Windows will be transformed into a service.

Nope, you say. They’ve tried that. I disagree. I read an article the other day declaring that Windows 11 marks the end of Windows as a Service, which Windows 10 was supposed to be. The article’s argument: the experiment failed, because Windows 11 is slated for yearly feature updates instead of twice-yearly or more frequent ones. Windows 11 has also annoyed a lot of early adopters and requires hardware upgrades that many people think are unnecessary. What’s going on?

Windows 10 as a service

The whole idea of Windows 10 as a service was lame. Windows 10 was (and is) an operating system installed on a customer’s box, running on the customer’s processor. The customer retains control of the hardware infrastructure. Microsoft took some additional responsibility for software maintenance with monthly patches, cumulative patches, and regular drops of new features, but that is nowhere near what I call a service.

When I installed Windows 10 on my ancient T410 ThinkPad, I remained responsible for installing applications and adding or removing memory and storage. If I wanted, I could rename the Program Files directory to Slagheap and reconfigure the system to make it work. I moved the Windows system directory to an SSD for a faster boot. And I can hit the power switch whenever I feel like it.

Those features may be good or bad.

As a computer and software engineer by choice, I enjoy fiddling with and controlling my own device. Some of the time. My partner Rebecca can tell you what I am like when a machine goes south while I’m hurrying to complete a project with no time for troubleshooting and fixing. Or what my mood was when I tried to install a new app six months after the late and sporty night when I renamed the Program Files directory to Slagheap, a night I had long since forgotten.

At times like those, I wish I had a remote desktop setup, like we had in the antediluvian age when users had dumb terminals on their desks and logged into a multi-user computer like a DEC VAX. A dumb terminal was little more than a remote keyboard with a screen that showed keystrokes as they were entered, interlaced with a text stream from the central computer. The old systems had many limitations, but one clear virtue: a user at a terminal was responsible only for what they entered. The sysadmin took care of everything else. Performance, security, backups, and configuration were, in theory at least, system problems, not user concerns.

Twenty-first century

Fast forward to the early twenty-first century. The modern equivalent of the old multi-user computer is a virtual desktop service running in a cloud data center, a common setup for remote workers that works remarkably well. For a user, it looks and feels like a personal desktop, except that it exists in a data center, not on a private local device. All data and configuration (the way a computer is set up) are stored in the cloud. An employee can access their remote desktop from practically any computing device attached to the network, provided they can prove their identity. After they log on, they have access to all their files, documents, processes, and other resources in the state they left them, or, in the case of an ongoing process, in the state the process has since reached.

What’s a desktop service?

From the employee’s point of view, they can switch devices with abandon. Start working at your kitchen table with a laptop, then log out in the midst of composing a document without bothering to save. Not saving is a little risky, but virtual desktops run in data centers, where events that might lose a document are much rarer than tripping on a cord, spilling a can of Coke, or the puppy doing the unmentionable at home. In data centers, whole teams of big brains scramble to find ways to shave a minute of downtime off each month.

Grab a tablet and head to the barbershop. Continue working on that same document in the state you left it instead of thumbing through old Playboys or Cosmos. Pick up again in the kitchen at home with fancy hair.

Security

Cybersecurity officers have nightmares about employees storing sensitive information on personal devices that fall into the hands of a competitor or hacker. With a virtual desktop, it is easy to prohibit employees from saving anything to the local machine where they are working. And with reliable, fast network connections everywhere, employees have no reason to save anything privately.

Nor do security officers need to worry about patching vulnerabilities on employee gear. As long as the employee’s credentials are not stored on the employee’s device, which is relatively easy to prevent, there is nothing for a hacker to steal.

The downside

What’s the downside? The network. You have to be connected to work, and you don’t want to see swirlies in the middle of something important while data buffers and gets rerouted somewhere north of nowhere.

However, all the tea leaves say those issues are on the way to becoming as marginal as the character interface on your electric teapot.

The industry is responding to the notion of Windows as a desktop service. See Windows 365 and a more optimistic take on Win365.

Now think about this for a moment: why not a personal Windows virtual desktop? Would that not solve a ton of problems for Microsoft? With complete control of the Windows operating environment, their testing is greatly simplified. A virtual desktop local client approaches the simplicity of a dumb terminal and could run on embarrassingly modest hardware. Security soars. A process running in a secured data center is not easy to hack. The big hacks of recent months have all been on lackadaisically secured corporate systems, not data centers.

It also solves a problem for me. Do I have to replace my ancient but beloved T410? Not if Microsoft prices a personal Windows 365 reasonably. Then I can switch to Windows 365 and carry on with my good old favorite device.

Marv’s note: I made a few tweaks to the post based on Steve Stroh’s comment.